If the above questions are all answered with a YES, then, my friend, you are desperately in need of caching. Caching will help you lessen the load on your servers by doing two main things:

- It eliminates lengthy trips to the (slow by nature) database to fetch the dynamic data

- It frees precious CPU cycles needed in processing this data and preparing it for presentation.

- It needs to be fast

- It needs to be shared across multiple servers

- Authentication is required for some actions

- Page presentation changes (slightly) based on logged in user

- Most pages are shared and only a few are private for each user

The cache store part is easy: memcached seems like the most sensible choice, as it satisfies points 1 and 2 and is orthogonal to the other three requirements. So it is memcached for now.

Now, which caching technique? Rails has several caching methods; the most famous are page, action and fragment caching. Gregg Pollack has a great writeup on these here and here. Model caching is also an option, but it can get a bit too complicated, so I'm leaving it out for now. It can be implemented later, though (layering your caches is usually a good idea).

Page caching is the fastest, but we would lose the ability to authenticate (unless we do so via HTTP authentication, which I would love to, but sadly that is not the case). This leaves us with action and fragment caching. Since the page contains slightly different presentation based on the logged-in user (like a hello message and maybe a localized datetime string), fragment caching would seem to be the better choice, no? Well, I would love to be able to use action caching after all: this way I can serve whole pages without invoking the renderer at all and really avoid doing lots of string processing in Ruby.

There is a solution, if you'd just wake up and smell the coffee: we are in the Web 2.0 age and we should think of Web 2.0 solutions for Web 2.0 problems. What if we add a little JavaScript to the page that dynamically displays the desired content based on the user's role? And if the content is really small, why not store it in a session cookie? Max Dunn implements a similar solution for his wiki here, and thus the page is served the same to everyone, with DOM manipulation kicking in to do the simple mods for the specific user. Rendering of those is done on the client, so there is no load on the server, and since the mods are really small, the client is not hurt either, and it gets the page much faster. It's a win-win situation. Life can't be better!

No, it can! In a content-driven website, many people check a hot topic frequently, and many reread the same data they read before. In those cases the server is sending them a cached page, yes, but it is resending the same bits that the browser already has in its cache. This is a waste of bandwidth, and your Mongrel will be waiting for the page transfer to finish before it can handle another request.

A better solution is to utilize client caching. Tell the browser to use the version in its cache as long as it has not been invalidated. Just send the new data in a cookie and let the page dynamically modify itself to adapt to the logged-in user. Relying on session cookies for the dynamic parts will prevent the browser from displaying stale data between two different sessions. But the page itself will not be fetched over the wire more than once, even for different users on the same computer.

I am using the Action Cache Plugin by Tom Fakes to add client caching capabilities to my Action Caches. Basically things go in the following manner:

- A GET request is encountered and is intercepted

- Caching headers are checked; if none exist then proceed, else send (304 NOT MODIFIED)

- Action Cache is checked; if the page is not there then proceed, else send the cached page (200 OK)

- Action processed and page content is rendered

- Page added to cache, with last-modified header information

- Response sent back to browser (200 OK + all headers)
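The steps above can be sketched in plain Ruby. This is only a rough model of the flow, not the plugin's actual code: the `CachedAction` class, its `get` and `expire` methods, and the in-memory hash standing in for memcached are all names I made up for illustration. I am also assuming the 304 is only sent when the client's `If-Modified-Since` value is at least as new as the cached entry.

```ruby
require "time"

# Hypothetical stand-in for an action cache with conditional GET support.
class CachedAction
  Entry = Struct.new(:body, :last_modified)

  def initialize(&renderer)
    @renderer = renderer # the expensive part: action processing + rendering
    @store = {}          # stands in for memcached
  end

  # Handles a GET request and returns [status, headers, body].
  def get(path, if_modified_since: nil)
    entry = @store[path]

    # Check the caching headers: if the client's copy is still current,
    # answer 304 and send no body at all.
    if entry && if_modified_since &&
       Time.httpdate(if_modified_since) >= entry.last_modified
      return [304, {}, ""]
    end

    # Check the action cache; on a miss, process the action, render the page
    # and store it with a timestamp (truncated to whole seconds, which is all
    # an HTTP date can carry).
    entry ||= (@store[path] = Entry.new(@renderer.call(path), Time.at(Time.now.to_i)))

    # Send the page with its Last-Modified header.
    [200, { "Last-Modified" => entry.last_modified.httpdate }, entry.body]
  end

  # Invalidation: drop the entry so the next GET renders again.
  def expire(path)
    @store.delete(path)
  end
end

action = CachedAction.new { |path| "<html>page for #{path}</html>" }
status, headers, = action.get("/topics/1")
puts status                                                        # first visit
puts action.get("/topics/1", if_modified_since: headers["Last-Modified"]).first
```

The first call renders and caches the page; the second call, carrying the `Last-Modified` value back as `If-Modified-Since`, never touches the renderer or sends the body again.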

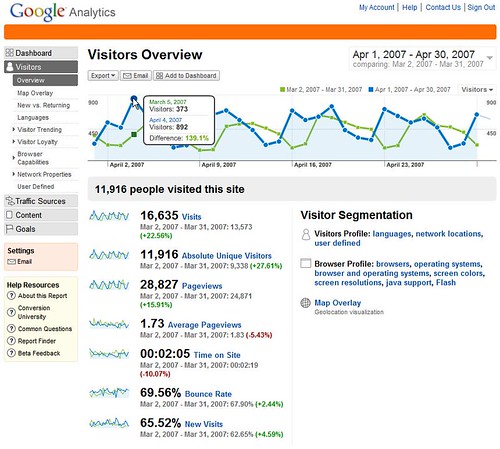

- We need to know the percentage of requests that are GETs, which can be cached, as opposed to POST, PUT and DELETE ones

- Of those GET requests, how many are repeated?

- Of those repeated GET requests, how many originate from the same client?
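To make these three numbers concrete, here is a small sketch that computes them from a list of requests. The input format (an array of `[client_id, http_method, path]` triples, e.g. parsed from an access log) and the method names `cache_potential` and `ratio` are my own assumptions for illustration, and "repeated" is taken to mean "the same path was already requested earlier in the list".

```ruby
# Computes the three shares from an array of hypothetical
# [client_id, http_method, path] triples.
def cache_potential(requests)
  gets = requests.select { |_, verb, _| verb == "GET" }

  seen_paths = Hash.new(0)     # how often each path was requested so far
  seen_by_client = Hash.new(0) # same, but per [client, path] pair
  repeated = 0
  repeated_same_client = 0

  gets.each do |client, _, path|
    repeated += 1 if seen_paths[path] > 0
    repeated_same_client += 1 if seen_by_client[[client, path]] > 0
    seen_paths[path] += 1
    seen_by_client[[client, path]] += 1
  end

  {
    get_share: ratio(gets.size, requests.size),           # GETs among all requests
    repeated_share: ratio(repeated, gets.size),           # repeated among GETs
    same_client_share: ratio(repeated_same_client, repeated) # same client among repeated
  }
end

# Safe division that returns 0.0 for an empty denominator.
def ratio(part, whole)
  whole.zero? ? 0.0 : part.to_f / whole
end

sample = [
  ["alice", "GET",  "/topics/1"],
  ["alice", "GET",  "/topics/1"], # repeated, same client
  ["bob",   "GET",  "/topics/1"], # repeated, different client
  ["bob",   "POST", "/topics/1/comments"],
  ["alice", "GET",  "/topics/2"]
]
p cache_potential(sample)
```

Roughly speaking, the repeated share hints at how much the action cache can save on the server, while the same-client share hints at how many responses could shrink to a 304 thanks to client caching.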

Happy caching